Jon Simpson

AAAI Spring Symposium 2007: Robots and Robot Venues

Along with Matt and Christian, I was able to attend and take part in the ‘Robots and Robot Venues’ track at the AAAI Spring Symposium at Stanford in Palo Alto, California. It proved to be an extremely interesting three days, spent around others working with educational robotics platforms and gaining an insight into some of the challenges that go with large-scale robotics projects.

It was interesting to talk to Howard Gordon of Surveyor Corporation, creator of the SRV-1 robotics platform. The SRV-1 offers a useful and rugged platform, very similar in scale to a LEGO Mindstorms RCX tracked robot, but with a solid metal construction and more in the way of computational ability. (This includes the ability to print to a terminal, which is extremely refreshing after several months working with the four-digit display of the Mindstorms RCX.)

The SRV-1 is designed to sense primarily through a camera interface (via blob finding or motion tracking), although it is also equipped with a set of infra-red sensors for close-range proximity detection. In terms of input it is a significantly different platform from a typical RCX configuration, and the camera interface is not something that we have explored in any depth so far. Christian did a great job of porting the Transterpreter to the Surveyor over the course of a day and a half.

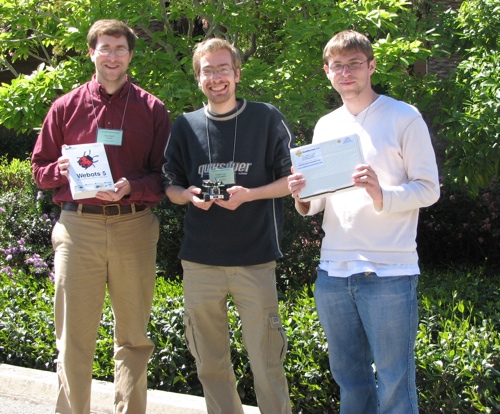

The final component of the conference was a one-hour robotics competition, in which Matt and I made use of the platform work done by Christian to attempt a behavioural control solution to the challenge, employing a 3-layer subsumption architecture written in occam-pi. We got a long way towards a working solution, and managed to win the competition despite having only 20-30 minutes to write the program (after infrastructure work). Our attempt at a subsumption architecture was actually slightly incorrect, and a minor logic error prevented a much better performance. However, it was great to put a slice of the work that has been achieved in the project forward at the conference for others to see, and I felt it opened my eyes to what’s going on in this area in the wider community. Howard wrote up the competition process on his blog, and I’ve borrowed one of his (excellent) pictures below.

To the victors go the spoils. Left to right: Matt Jadud, Christian Jacobsen and me.
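For anyone unfamiliar with the idea, a three-layer behavioural controller of the kind mentioned above can be sketched roughly as follows. This is not our competition program (that was written in occam-pi, as a network of communicating processes); it is a minimal Python illustration that uses fixed-priority arbitration, a common simplification of subsumption. The layer names (`avoid`, `seek`, `wander`), the `Sensors` fields and the motor speed values are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# (left motor speed, right motor speed); illustrative units only.
Command = Tuple[float, float]


@dataclass
class Sensors:
    """Hypothetical sensor snapshot; not the SRV-1's real interface."""
    obstacle_near: bool    # e.g. from the infra-red proximity sensors
    target_visible: bool   # e.g. from camera blob finding
    target_bearing: float  # -1.0 (far left) .. 1.0 (far right)


def avoid(s: Sensors) -> Optional[Command]:
    """Highest priority: spin on the spot when something is too close."""
    return (-0.5, 0.5) if s.obstacle_near else None


def seek(s: Sensors) -> Optional[Command]:
    """Middle priority: steer towards a visible target."""
    if not s.target_visible:
        return None
    turn = max(-1.0, min(1.0, s.target_bearing))
    return (0.5 + 0.2 * turn, 0.5 - 0.2 * turn)


def wander(s: Sensors) -> Optional[Command]:
    """Lowest priority: always has an opinion, so the robot keeps moving."""
    return (0.6, 0.4)


# Ordered from highest to lowest priority.
LAYERS: List[Callable[[Sensors], Optional[Command]]] = [avoid, seek, wander]


def arbitrate(s: Sensors) -> Command:
    """Return the command of the first layer that wants control.

    Any layer that produces a command suppresses every layer after it.
    """
    for layer in LAYERS:
        command = layer(s)
        if command is not None:
            return command
    return (0.0, 0.0)  # Unreachable while wander() always returns a command.
```

Calling `arbitrate` once per control cycle with fresh sensor readings gives the robot's motor command for that cycle. Getting the priority ordering or suppression conditions slightly wrong is exactly the sort of small logic error that is easy to make under time pressure.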

Other high points of the conference included Fred Martin’s ‘Real Robots Don’t Drive Straight’ talk, and the ensuing discussion around favouring behavioural control over dead reckoning (a theme which seems to be emerging from a lot of robotics work). The idea of introducing a degree of entropy into robotics competitions like the FIRST LEGO League was especially interesting, given my previous experiences with approaches to these problems. The Blackfin Handyboard was a pretty amazing piece of kit to behold after a year of low-level work on the Mindstorms RCX, and it was interesting to see the growing level of interest in the Mindstorms NXT amongst the other participants.

I certainly took away a large amount of new information and ideas, especially surrounding the application of AI techniques on the robotics platforms for which the Transterpreter project has laid foundations. A large number of others in the field are using a ‘tethered’ approach, in which the robot’s own computational power (in some cases, up to a 400MHz XScale processor) is used merely to proxy to a PC. Our approach, which uses the Transterpreter to put a small embedded software stack on the robot so that it runs its own control algorithms and is fully autonomous, seems to be very much at odds with this common approach.

The conference was a great experience, and I really enjoyed all of the interactions over the three days with a large number of people.